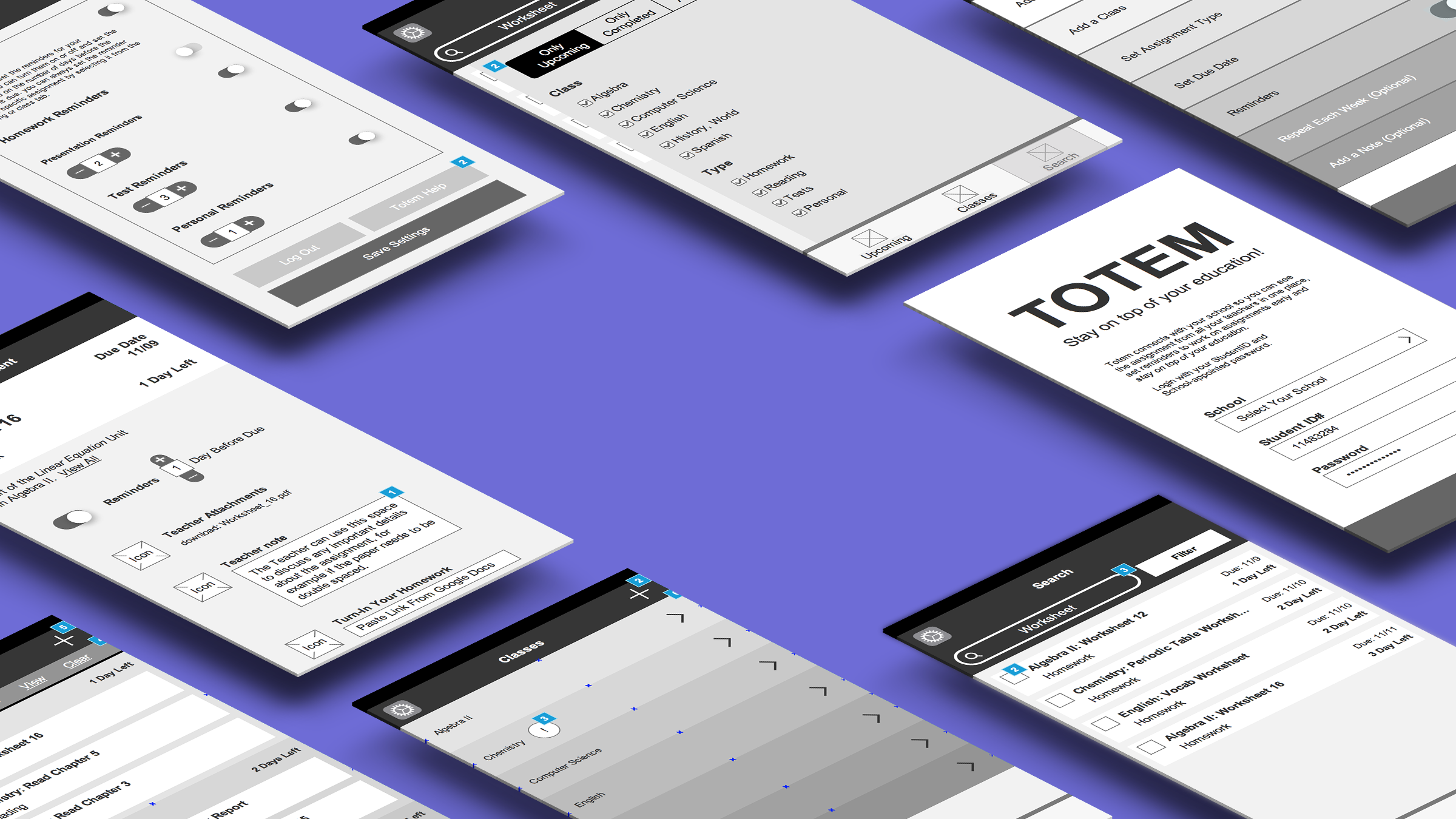

Jibo Snap

Product Design

-

Role

Lead Designer

UX Design

Conversation UI

-

Design Challenge

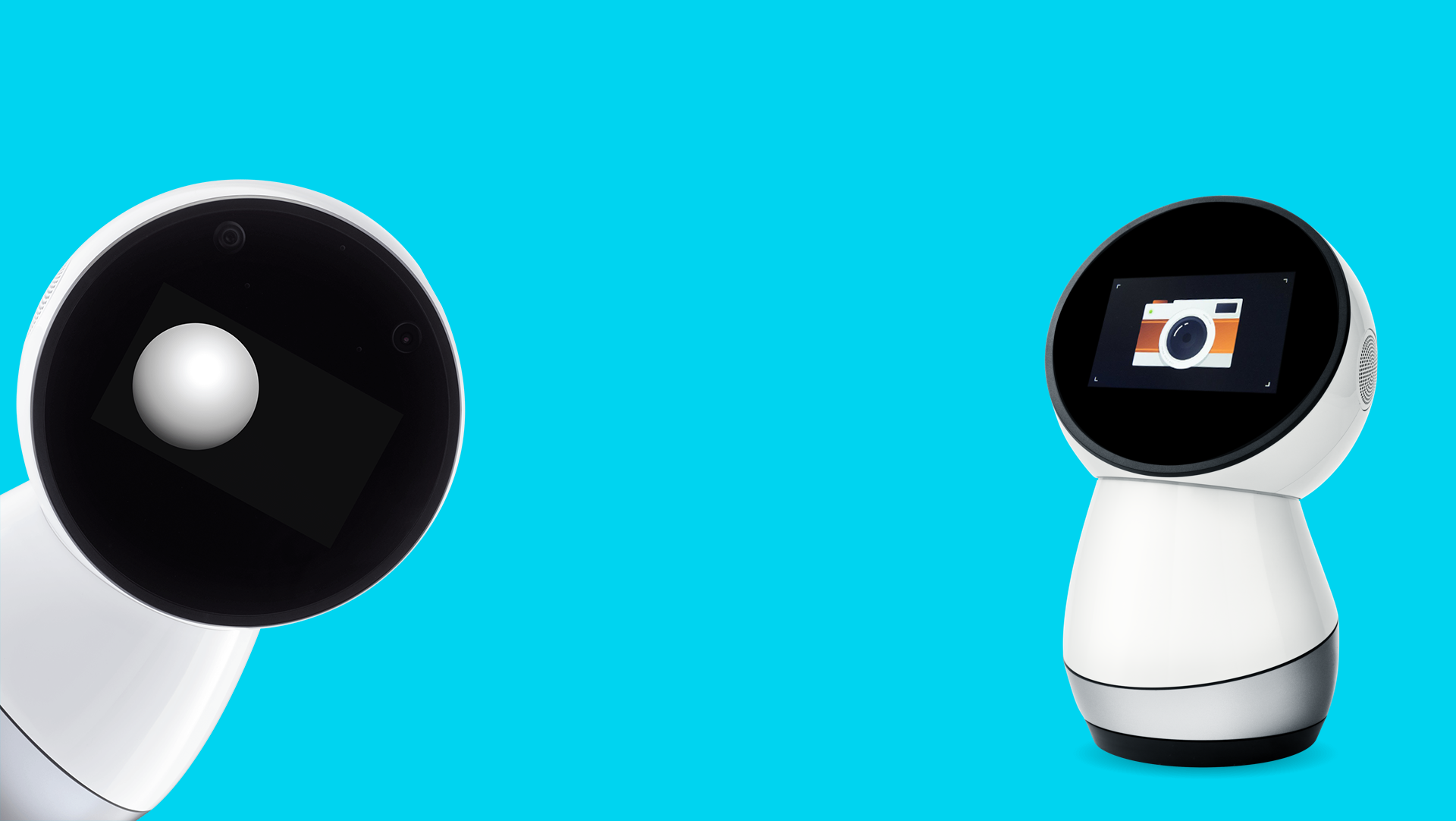

Design a conversational interface that would help a robot with a camera frame up and take a photo a user would want to keep and share.

-

Tools

Google Slides

Lucidchart

Adobe Animate

Brief

One of the first Jibo skills shown in the original Indiegogo campaign was his ability to take pictures. As a design principle, we wanted Jibo to appear creative and smart, so it was important that he navigate as much of the photo-taking process on his own as possible. This meant leveraging computer vision to know when people, and faces in particular, were in frame. He also uses sound localization to pivot his body toward where he believes people are as a starting point. With these tools and prompts from Jibo himself, we turned Jibo the robot into a photographer.

Conversation Tree

Jibo Snap is triggered by a simple command such as "take a picture" or an equivalent phrase. From there, the bulk of the process is handled by sound localization and computer vision. The goal of the happy path is to have Jibo do the vast majority of the work. If he can find a face quickly and frame the picture, he will just take it (as shown in the videos below). If the process is not as clean, he becomes more talkative and gives the user more feedback. As Adam Savage would say, “Failure is always an option,” so Jibo has to fail gracefully when it happens. If he is unable to find a face after 60 seconds of searching and additional prompts, he will simply show you what he is looking at and ask if you want to take that photo.
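The flow described above can be sketched as a small simulation. This is only an illustrative sketch, not the shipped Jibo code: the function names, the prompt wording, the 5-second polling step, the 10-second quiet period before Jibo starts talking, and the "cancelled" outcome are all assumptions made for the example. Only the 60-second search limit and the fallback question come from the description above.

```python
from dataclasses import dataclass, field
from typing import Callable, List

SEARCH_TIMEOUT_S = 60.0  # from the text: stop searching after 60 seconds


@dataclass
class SnapResult:
    outcome: str  # "auto_photo", "fallback_photo", or "cancelled" (hypothetical labels)
    prompts: List[str] = field(default_factory=list)


def run_snap(
    face_found_at: Callable[[float], bool],
    user_accepts_fallback: Callable[[], bool],
    step_s: float = 5.0,          # assumed polling interval
    quiet_period_s: float = 10.0,  # assumed time before Jibo starts prompting
) -> SnapResult:
    """Simulate one photo session on a simulated clock (no real camera)."""
    prompts: List[str] = []
    elapsed = 0.0
    while elapsed < SEARCH_TIMEOUT_S:
        # Happy path: a face is in frame, so take the photo without fuss.
        if face_found_at(elapsed):
            return SnapResult("auto_photo", prompts)
        # Less clean path: become more talkative the longer the search runs.
        if elapsed >= quiet_period_s:
            prompts.append("I can't see anyone yet. Could you step in front of me?")
        elapsed += step_s
    # Graceful failure: show the viewfinder and let the user decide.
    prompts.append("Here's what I'm looking at. Want me to take this photo?")
    if user_accepts_fallback():
        return SnapResult("fallback_photo", prompts)
    return SnapResult("cancelled", prompts)
```

For example, `run_snap(lambda t: True, lambda: True)` returns an `auto_photo` result with no prompts, while a detector that never finds a face ends with the fallback question as the last prompt.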

Final Interaction

In the final interaction we included a few extra pieces of user feedback to make it as clear as possible how confident Jibo was in taking the photo. In most cases he is very confident, finds people immediately, and snaps the photo. Testing showed that the corner frames and the displayed viewfinder were the most effective elements in getting people to stand still and ultimately get a photo they would keep. Beta testing with the robot in people's homes showed this skill was very popular among children, so we did significant extra tweaking to make sure Jibo would check lower to the ground and give kid-friendly instructions to get the best photos.

Credit to Heather Mendonça and Kim Hui for the original camera illustration and animation, respectively. To refine the timing and user feedback, I modified the original animation and added the frame corner elements.

Learning and Growth

This was my first time working on a conversational interface and leveraging computer vision to capture user input. To get this exactly right, the lead developer, Bard McKinley, and I spent hours role-playing as different users. We also did more user testing on this feature than on any other launch skill to make sure that Jibo appeared smart to our end users.